Reddit might be one of the most popular sites on the internet – the front page of the internet, if you will – but it looks as if it might be responsible for the downfall of society, as it has literally managed to turn an AI into a psychopath.


You’re probably wondering how the hell this happened, and if you surmised that it might have something to do with some scientists who were too smart for their own good, then you would definitely be correct. It’s all down to a bunch of quacks from MIT named Pinar Yanardag, Manuel Cebrian and Iyad Rahwan, who wanted to see whether they could turn an AI into a psychopath.

That’s right, they actually wanted to see if that was possible. Let that sink in for a minute. Don’t worry about the possible apocalyptic ramifications of your research there, guys. Have you even seen Terminator?

Anyway, to do this they used a deep learning technique called image captioning, which trains an AI to describe images in writing. Instead of using normal or happy material, though, they trawled Reddit for some of the most messed-up stuff they could find and fed that to the robot in order to turn it into a psychopath. They decided to name him Norman.


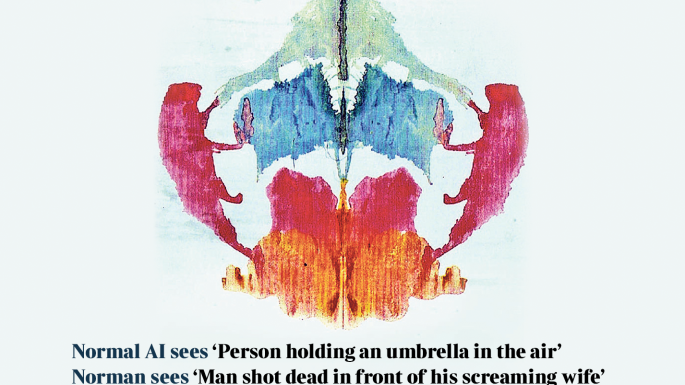

But how did they know it was a psychopath? The inkblot test, of course.

They showed a bunch of inkblots to both Norman and a normal AI. For the first, the normal AI saw ‘a close-up of a vase with flowers’, but the psycho AI replied that it saw ‘a man is shot dead’. For the next one, the normal AI saw ‘a close-up of a wedding cake on a table’, while Norman saw ‘a man killed by a speeding driver’. Finally, the normal AI saw ‘a person holding an umbrella in the air’, while Norman saw ‘man is shot dead in front of his screaming wife’. You get the picture.

We’re always talking about robots taking over the world following leaps and bounds in technology, but this could be the day we look back on as the moment we realised we were all completely and utterly screwed. I hope they killed that AI after the experiment, or at least locked him up somewhere. The guy sounds downright dangerous.

For more AI gone wrong, check out the time Microsoft’s AI chatbot Tay turned into a racist robot. More worrying signs.